What is Docker?

Docker is a software platform that lets you build, test, and deploy applications quickly. Docker packages software into standardized units called containers, which contain everything the software needs to run, including libraries, system tools, code, and runtime. Using Docker, you can quickly deploy applications to any environment, scale them, and be confident that your code will run.

Running Docker on AWS gives developers and administrators a highly reliable, cost-effective way to build, ship, and run distributed applications of any size. AWS supports both Docker licensing models: open source Docker Community Edition (CE) and subscription-based Docker Enterprise Edition (EE).

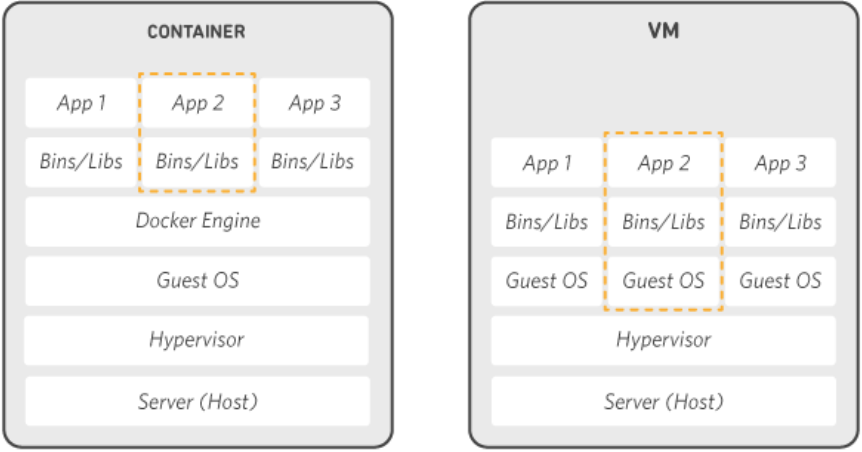

Unlike conventional virtual machines (VirtualBox, VMware, etc.), Docker’s virtualization structure has no hypervisor layer. Instead, containers access the host operating system through the Docker Engine and share its kernel and system tools. As a result, Docker consumes fewer system resources than conventional VMs.

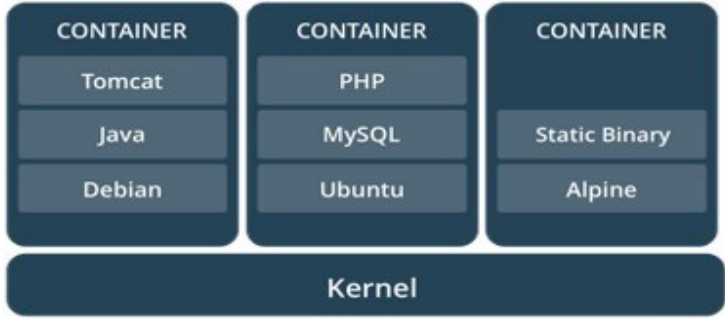

Docker was originally built on the LXC virtualization mechanism. A Docker image is run in units called containers, and each container runs as a process on the host. Depending on the host's capacity, thousands of Docker containers can run on one machine. Container images share the system files they have in common, which saves disk space. As seen in the figure, application containers use common binaries and libraries. In classical virtual machine systems, by contrast, a separate operating system and set of library files has to be reserved for each application.
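The disk-space saving can be illustrated with a small calculation. The Python sketch below is not Docker code: the layer names and sizes are invented for illustration, and it only compares storing every layer once against giving each image a private copy.

```python
# Hypothetical layer sizes in megabytes; names and numbers are invented.
LAYER_SIZES_MB = {
    "base-os": 80,
    "python-runtime": 120,
    "app-a-code": 5,
    "app-b-code": 7,
}

# Each image is an ordered list of layers; both images reuse the first two.
IMAGES = {
    "app-a": ["base-os", "python-runtime", "app-a-code"],
    "app-b": ["base-os", "python-runtime", "app-b-code"],
}


def size_without_sharing(images, sizes):
    """Disk use if every image kept a private copy of each of its layers."""
    return sum(sizes[layer] for layers in images.values() for layer in layers)


def size_with_sharing(images, sizes):
    """Disk use if each distinct layer is stored only once."""
    unique_layers = {layer for layers in images.values() for layer in layers}
    return sum(sizes[layer] for layer in unique_layers)


if __name__ == "__main__":
    print("without sharing:", size_without_sharing(IMAGES, LAYER_SIZES_MB), "MB")
    print("with sharing:   ", size_with_sharing(IMAGES, LAYER_SIZES_MB), "MB")
```

With these made-up figures the two images need 412 MB as private copies and 212 MB when the common layers are shared.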

Docker’s birth story

To start with a classic definition, Docker is the most widely used software container platform in the world. Containerization means packaging an application, together with what it needs to run, into a container. Docker is a technology built on Linux Containers (LXC), which appeared on Linux in 2008. LXC provides Containers, that is, isolated Linux-based systems that run side by side on the same operating system. In other words, LXC offers virtualization at the operating system level. LXC makes the processes in each Container behave as if they were the only ones running on the operating system, even though all Containers are served by the same operating system. It also gives each Container its own view of what the operating system offers, such as the file system and environment variables.

Despite running on the same operating system, Containers are isolated from each other and cannot communicate with each other unless explicitly allowed. One purpose of this restriction is to protect each Container from the other Containers on the same Host. We will come to how all these features turn into useful functions. First, let’s continue with a little more on LXC, Docker and classic virtualization.

The transition from LXC to Docker

Docker builds on the rich heritage of LXC, but it has skillfully packaged and standardized the operations that LXC left to be done by hand. Docker has, so to speak, brought the extensive functions and configurations offered by LXC down to a level everyone can use. Its most important feature is that it defines the structure of a Container through a text-based Image definition (we will discuss the details later). In this way, Containers with the same features can easily be created from the same Image, shared with other people through the Docker Registry, and easily extended. Because Images are organized in layers, a change affects only certain layers of the Image, so the cost of updating an Image stays low.

Another main difference from LXC is that Docker encourages its users to run one and only one process per Container. Many of us have seen the meme in which a child claims to have solved a bug by deleting the code. To me, Docker is doing something similar here: it has achieved a more usable and more useful structure by narrowing the wide and difficult-to-use functions that LXC offers. Running a single process per Container, instead of several, makes Containers reusable, easier to use widely, and easier to understand.

We will illustrate this in detail in later blog posts. As a small example for now, assume that the system we want to build consists of a web server, an app server and a database server. The structure Docker proposes is to put these three components into separate Containers instead of a single one.
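To see why a layered Image is cheap to update, here is a simplified Python model. It is an assumption-laden sketch, not Docker's actual algorithm: each layer gets an ID derived from its own build step plus the ID of the layer beneath it, so a change leaves every layer below it untouched. The function names and the build steps are made up for illustration.

```python
import hashlib


def layer_ids(instructions):
    """Return one ID per build step; each ID depends on all earlier steps."""
    ids = []
    parent = ""
    for instruction in instructions:
        digest = hashlib.sha256((parent + "\n" + instruction).encode("utf-8"))
        parent = digest.hexdigest()
        ids.append(parent)
    return ids


def reusable_layers(old_instructions, new_instructions):
    """Count the leading layers whose IDs are identical in both versions."""
    count = 0
    old_ids = layer_ids(old_instructions)
    new_ids = layer_ids(new_instructions)
    for old_id, new_id in zip(old_ids, new_ids):
        if old_id != new_id:
            break
        count += 1
    return count


if __name__ == "__main__":
    # Hypothetical build steps: only the last one differs between versions.
    v1 = ["FROM ubuntu:22.04", "RUN apt-get update", "COPY app-v1 /app"]
    v2 = ["FROM ubuntu:22.04", "RUN apt-get update", "COPY app-v2 /app"]
    print(reusable_layers(v1, v2), "of", len(v2), "layers can be reused")
```

In this model, changing only the last step means the first two layers are reused and a single layer has to be rebuilt and transferred.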

How does Docker work?

Docker works by providing a standard way to run your code. Docker is an operating system for containers. Just as a virtual machine virtualizes server hardware (removing the need to manage it directly), containers virtualize a server’s operating system. Docker is installed on each server and provides simple commands that you can use to build, start, or stop containers.

AWS services such as AWS Fargate, Amazon ECS, Amazon EKS and AWS Batch make it easy to run and manage Docker containers at scale.

Why use Docker?

Using Docker lets you ship code faster, standardize application operations, move code seamlessly, and save money by improving resource utilization. With Docker, you get a single object that can run reliably anywhere. Docker’s simple and straightforward syntax gives you full control. Its wide adoption means there is a robust ecosystem of tools and off-the-shelf applications ready to use with Docker.

When should Docker be used?

You can use Docker containers as a basic building block to create modern applications and platforms. Docker makes it easy to build and run distributed microservice architectures, deploy your code with standardized continuous integration and delivery pipelines, build highly scalable data processing systems, and create fully managed platforms for your developers.

Running Docker on AWS

AWS supports both open source and commercial Docker solutions. There are several ways to run containers on AWS, including Amazon Elastic Container Service (ECS), a highly scalable, high-performance container management service. AWS Fargate for Amazon ECS is a technology that allows you to run containers in production without having to provision or manage servers. Amazon Elastic Kubernetes Service (EKS), formerly Amazon Elastic Container Service for Kubernetes, makes it easy to run Kubernetes on AWS. Amazon Elastic Container Registry (ECR) is a highly available and secure private container registry that makes it easy to store and manage your Docker container images, encrypting and compressing images at rest so that they are fast to pull and secure. AWS Batch allows you to run highly scalable batch processing workloads using Docker containers.

Terminology

Since Docker is a fairly new, Linux-based technology, both its own terms and some Linux terms may look unfamiliar at first glance, but you will get used to them quickly.

Docker Container

A Container is the name given to each of the processes that the Docker Daemon runs on the Linux kernel in isolation from one another. If we compare Docker to a hypervisor in the virtual machine analogy, the Container is the equivalent of each operating system (virtual server) running on the physical server. Containers can be launched within milliseconds, paused at any time, stopped completely, and restarted.
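The lifecycle described here (launch, pause, stop, restart) can be pictured as a small state machine. The Python sketch below is a deliberately simplified model with invented function names. Real Docker tracks more states and allows more transitions than these, so treat it as an illustration rather than a description of the Docker Daemon.

```python
# Simplified lifecycle model; real Docker has more states and transitions.
TRANSITIONS = {
    ("created", "start"): "running",
    ("running", "pause"): "paused",
    ("paused", "unpause"): "running",
    ("running", "stop"): "exited",
    ("exited", "start"): "running",
    ("running", "restart"): "running",
}


def next_state(state, action):
    """Return the state after the action, or raise ValueError if not allowed."""
    try:
        return TRANSITIONS[(state, action)]
    except KeyError:
        raise ValueError(f"cannot {action} a container that is {state}") from None


def replay(actions, state="created"):
    """Apply a sequence of actions, starting from a freshly created container."""
    for action in actions:
        state = next_state(state, action)
    return state


if __name__ == "__main__":
    print(replay(["start", "pause", "unpause", "stop", "start"]))
```

Each action name mirrors a `docker` subcommand of the same name (`start`, `pause`, `unpause`, `stop`, `restart`).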

Image and Dockerfile

An Image is the name given to the binary built from a text-based Dockerfile (written exactly like this: not dockerfile, DockerFile or DOCKERFILE). The Dockerfile determines the operating system or other Image to build on, the structure of the file system and the files in it, and the program (or programs, although running several is not preferred) to be run by the Containers that the Docker Daemon starts.

In the first section, where we talked about the birth of Docker, we said that Docker changed the game by narrowing down what LXC offered, and that this is why it succeeded. Here, Docker requires every Image that forms the skeleton of the Containers to be defined by a Dockerfile. The Dockerfile states explicitly which Image the new Image is based on, which files it contains, and which program it will run with which parameters. We will discuss the content of a Dockerfile, and how to create a new Dockerfile and therefore a new Image, in detail in the next blog post. This explanation is sufficient for the purposes of this one.
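As a small preview, the three pieces of information named above (base Image, files, and the program with its parameters) map onto the Dockerfile instructions `FROM`, `COPY` and `CMD`. The Python sketch below only generates Dockerfile text. The function name and the example paths are made up for illustration, and it assumes paths without spaces.

```python
import json


def render_dockerfile(base_image, files, command):
    """Build Dockerfile text from a base image, files to copy, and a command.

    files maps a source path in the build context to a destination path in
    the image; command is a list such as ["nginx", "-g", "daemon off;"].
    """
    lines = [f"FROM {base_image}"]
    for source, destination in files.items():
        lines.append(f"COPY {source} {destination}")
    # The exec form of CMD is a JSON array, so json.dumps yields valid syntax.
    lines.append(f"CMD {json.dumps(command)}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical static site served by Nginx.
    text = render_dockerfile(
        "nginx:latest",
        {"site/": "/usr/share/nginx/html/"},
        ["nginx", "-g", "daemon off;"],
    )
    print(text, end="")
```

For the example call this prints a three-line Dockerfile: a `FROM nginx:latest` line, one `COPY` line, and `CMD ["nginx", "-g", "daemon off;"]`.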

Docker Daemon (Docker Engine)

It is the equivalent of the hypervisor in the Docker ecosystem, and it takes over the role that LXC played on Linux. Its function is to provide the environment Containers need in order to run in isolation from each other, as described in their Images. This is where all the complex, close-to-the-operating-system work is handled: the Container’s entire lifecycle, its file system, its CPU and RAM limits, and so on.

Docker CLI (Command-Line Interface) – Docker Client

It provides the set of commands the user needs in order to talk to the Docker Daemon. It is responsible for delivering commands to the Docker Daemon, such as downloading a new Image from the Registry, creating a new Container from an Image, stopping a running Container, restarting it, and assigning processor and RAM limits to Containers.
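The tasks listed above correspond to `docker pull`, `docker run`, `docker stop` and `docker restart`, with `--memory` and `--cpus` setting the RAM and processor limits. The Python sketch below only assembles the argument lists and prints them; it does not call Docker. The helper names, the container name `web` and the limit values are assumptions chosen for illustration.

```python
import shlex


def pull_command(image):
    """Download an image from the registry."""
    return ["docker", "pull", image]


def run_command(image, name, memory=None, cpus=None):
    """Start a container in the background, optionally with RAM/CPU limits."""
    argv = ["docker", "run", "--detach", "--name", name]
    if memory is not None:
        argv += ["--memory", memory]
    if cpus is not None:
        argv += ["--cpus", str(cpus)]
    argv.append(image)
    return argv


def stop_command(name):
    return ["docker", "stop", name]


def restart_command(name):
    return ["docker", "restart", name]


if __name__ == "__main__":
    commands = [
        pull_command("nginx:latest"),
        run_command("nginx:latest", "web", memory="256m", cpus=0.5),
        stop_command("web"),
        restart_command("web"),
    ]
    for argv in commands:
        # shlex.join quotes arguments so each line can be pasted into a shell.
        print(shlex.join(argv))
```

Keeping each command as a list rather than a single string means it could later be handed to `subprocess.run` without any shell quoting problems.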

Docker Registry

One feature that makes Docker, already a technological wonder, even more usable and valuable is that, like all open source systems, it encourages sharing and puts it at the centre of the business. On Docker Hub, images produced by the community can be downloaded free of charge, and newly created images can be uploaded either as public (free) or private (paid) repositories, for community, personal or company use, and downloaded again later. In addition to Docker Hub, which runs in the cloud, Docker also offers a private registry for those who want to keep their images in their own private cloud.

Containers are created from Images. Images are the product of shared effort and are kept in a Docker Registry. For example, Canonical, the company behind Ubuntu, maintains the official Ubuntu repository on Docker Hub and publishes Ubuntu versions there. Anyone who wants to create a Container from the Ubuntu Image can use it directly. As a second usage scenario, you can build a new Image on top of the provided Ubuntu Image, for example one that serves static web content with Nginx, and publish it on Docker Hub, that is, make it available both to yourself and to others.

Docker Repository

A Repository is a group of related Images. The different Images in a repository are distinguished by tags, so that different versions can be managed.
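On the command line, a repository and a tag are written together as `repository:tag`, and Docker falls back to the tag `latest` when none is given. The Python sketch below splits such a reference. The function name is made up, and it assumes the reference carries no `@sha256:...` digest.

```python
def split_image_reference(reference):
    """Split "repository[:tag]" into (repository, tag).

    A colon only counts as the tag separator when it comes after the last
    slash, so a registry port such as "localhost:5000/app" is not mistaken
    for a tag. Assumes no "@sha256:..." digest is present.
    """
    last_slash = reference.rfind("/")
    last_colon = reference.rfind(":")
    if last_colon > last_slash:
        return reference[:last_colon], reference[last_colon + 1:]
    return reference, "latest"


if __name__ == "__main__":
    for ref in ["ubuntu", "ubuntu:22.04", "localhost:5000/app:1.2"]:
        print(ref, "->", split_image_reference(ref))
```

Two tags in the same repository, such as `ubuntu:20.04` and `ubuntu:22.04`, therefore name two different versions of the same Image family.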

Classic VM vs Docker

VMs have a full operating system for each running instance. Docker, on the other hand, uses reduced-size images instead of a full operating system and shares the host operating system’s kernel and libraries. This makes Docker lighter on system resources, but it also lowers the isolation level. With this in mind, we can make the following comparisons.

Both virtualization approaches have advantages and disadvantages relative to each other. However, it is fair to say that some of Docker’s advantages are critical.

One of them is fast startup. A Docker container starts in seconds because it does not boot a full operating system and runs close to the host system. Another is versioning, one of Docker’s most striking features: Docker lets us record different versions of the images it uses, which opens the door to sharing prepared images among users. A further feature is shareability. Images prepared by users or distributors can be pushed to central servers, and other users can pull them from those servers.

Running containers on a single operating system naturally raises the question of security. Docker addresses this in software: applications running in one container cannot see or affect applications in another container unless configured otherwise. In other words, they are isolated.