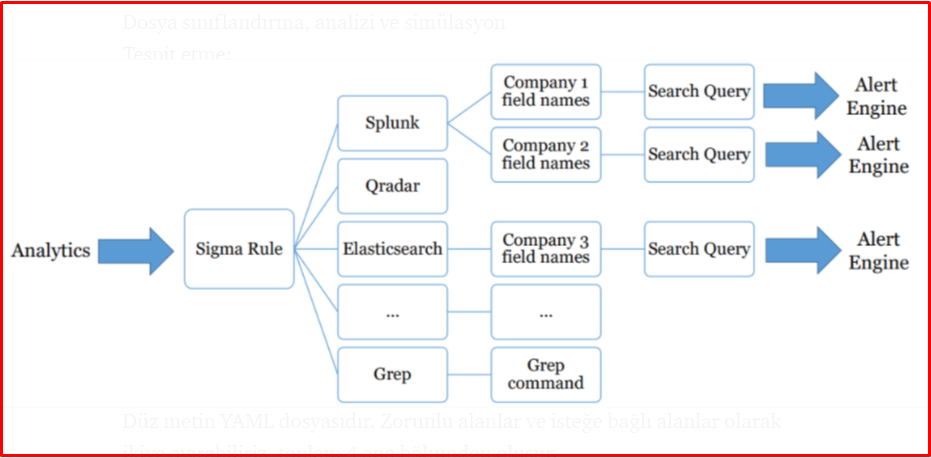

Sigma is a generic signature format for describing log events in a flexible and straightforward way. The format can be applied to any kind of log and is easy to write. The aim of the project is to provide a structured rule format in which researchers and analysts can describe detection methods and share them with others.

Typically, everyone collects log data for analysis, correlates it, and writes detection rules in whichever SIEM or log collection product they use. However, there has been no open format, comparable to YARA rules, in which to share this work with others. Sigma fills this gap.

Sigma rules can be converted for many platforms, including the following.

- Kibana

- Elastic X-Pack Watcher

- LogPoint

- Windows Defender Advanced Threat Protection (WDATP)

- Azure Sentinel / Azure Log Analytics

- ArcSight

- Splunk (Dashboard and Plugin)

- ElasticSearch Query Strings

- ElasticSearch Query DSL

- QRadar

- PowerShell

- Grep with Perl-compatible regular expression support

- Qualys

- RSA NetWitness

Writing Rules with Sigma

Sigma is easy to use because rules are written in YAML, so all we need is an editor with YAML support. Writing rules is similar to writing YARA rules, which likewise requires only an editor. We will use WebStorm with the YAML plugin; you can use VS Code or Atom if you prefer.

Download the Sigma repo from the link below.

https://github.com/Neo23x0/sigma

Open the project in any editor and go to the “rules” directory, which contains sample rules. You can edit them or write rules from scratch. We will explain the rule structure below, starting with one of the sample rules.

As can be seen below, the rule structure is quite simple and straightforward. We will examine the parameters of the rule and their values.

- Title and Logsource: The title is a short name for the rule; its status, description, and references are given in the accompanying metadata fields. In the logsource field, you define the product (windows, symantec), category (proxy, firewall), service (ssh, powershell), and so on. A log source is described by its category, product, and service.

- Description: Detailed information about what the rule does.

- Condition: Defines how the rule is evaluated on the target platforms. The field holds the matching logic, using operators such as and, or, and the pipe (|), and aggregations such as count, max, and avg.

    condition: selection
    condition: selection | count(dst_port) by src_ip > 10
    condition: selection | count(dst_ip) by src_ip > 10

- Author: The person who wrote the rule.

- Category: Which category the rule belongs to (such as web server or apt)

- Product: The product that generates the logs, if the rule targets a specific one (such as Apache, Windows, or Unix). The service can be specified in the same way.

- Date: The date the rule was created.

- Level: The criticality of the rule: low, medium, high, or critical.

- Status: Indicates the status of the rule and takes three different values.

- Stable: The rule is considered stable and can be used across platforms.

- Test: The rule is mostly finished and the final tests are being carried out.

- Experimental: The rule is still in the preparation phase and is not yet recommended for use.

- References: The sources the rule is based on, such as articles or blog posts, can be listed in this section.

- Metadata: It consists of the title, status, description, references and tags.

- Detection: The field that describes the indicators our rule looks for; the tools use it to generate the searches that raise alerts.

    detection:
        keywords:
            - 'rm *bash_history'
            - 'echo "" > *bash_history'

- Keywords: The indicators that trigger an alert, that is, strings characteristic of the activity the rule is written to detect.
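The aggregation examples given for the Condition field can be read as a group-and-count over the events that already match selection. The following is a minimal Python sketch of that reading, not how any backend implements it. It assumes events are plain dicts with src_ip and dst_port keys, and it assumes that count with a field name counts distinct values of that field. The function name `count_by` and the sample events are hypothetical.

```python
from collections import defaultdict


def count_by(events, count_field, group_field, threshold):
    """Return the group values whose distinct count of count_field exceeds threshold.

    Mirrors a condition such as: selection | count(dst_port) by src_ip > 10
    (assumption: count(field) counts distinct values of that field).
    """
    distinct = defaultdict(set)
    for event in events:
        # Skip events that lack either field instead of raising KeyError.
        if count_field in event and group_field in event:
            distinct[event[group_field]].add(event[count_field])
    return sorted(group for group, values in distinct.items() if len(values) > threshold)


# Hypothetical events that already matched "selection".
events = [{"src_ip": "10.0.0.5", "dst_port": port} for port in range(20, 32)]
events += [{"src_ip": "10.0.0.9", "dst_port": 443} for _ in range(50)]

scanners = count_by(events, "dst_port", "src_ip", 10)
print(scanners)
```

In this sample, 10.0.0.5 touches twelve different ports and is reported, while 10.0.0.9 repeats a single port fifty times and is not.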

Creating a Sigma Rule

Robots.txt Visit Detection

For our first rule, assume that we have a web service running on Apache. We want an alert to be generated whenever the robots.txt page is requested. Since robots.txt is routinely visited by bots, the rule will produce false positives. We define the rule as shown below. The important part is the detection section, which determines the effectiveness of the rule.

    title: Apache Robots.txt Detection
    description: When a request is made to the robots.txt page via URL strings, the necessary alert will be generated.
    author: Ömer ŞIVKA
    logsource:
        category: webserver
        product: apache
    detection:
        keywords:
            - '/robots.txt'
        condition: keywords
    fields:
        - url
    level: low
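To get a feel for what the keywords detection does before converting the rule, the sketch below applies the same keyword to a few log lines in plain Python. It is an illustration under assumptions, not what sigmac generates: the log lines are made up, `matches_keywords` is a hypothetical helper, and keyword matching is simplified to a case-insensitive substring test.

```python
KEYWORDS = ["/robots.txt"]  # the keyword list from the rule's detection section


def matches_keywords(log_line, keywords=KEYWORDS):
    """Return True if any keyword occurs in the log line (case-insensitive)."""
    lowered = log_line.lower()
    return any(keyword.lower() in lowered for keyword in keywords)


# Made-up Apache access log lines, for illustration only.
sample_lines = [
    '203.0.113.7 - - [01/Jan/2021:10:00:00 +0000] "GET /robots.txt HTTP/1.1" 200 68 "-" "Googlebot/2.1"',
    '203.0.113.8 - - [01/Jan/2021:10:00:05 +0000] "GET /index.html HTTP/1.1" 200 5120 "-" "Mozilla/5.0"',
    '203.0.113.9 - - [01/Jan/2021:10:00:09 +0000] "GET /Robots.TXT HTTP/1.1" 404 196 "-" "curl/7.68.0"',
]

alerts = [line for line in sample_lines if matches_keywords(line)]
for line in alerts:
    print(line)
```

The first hit comes from a crawler, which is exactly the kind of false positive this rule is expected to produce.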

Testing Sigma Rules

We will use the “sigmac” tool to test the rules we have written. It is included in the tools directory of the Sigma project.

To use this Python-based tool, we first download and unpack the Sigma project.

wget https://github.com/SigmaHQ/sigma/archive/master.zip

unzip master.zip

Converting the Rule with Sigmac

The sigmac application in the tools directory converts the rules we have written into the query format of the target platform. Python 3 is required. The command below prints the usage instructions for sigmac.

python sigmac -h

There are several ways to convert rules:

Transforming the Single Rule

The -t parameter selects the target platform to convert to. The -r parameter tells sigmac to process the given rule path recursively.

sigmac -t splunk -r /home/kali/sigma-master/rules/web/web_webshell_keyword.yml

Converting the Ruleset

If we do not want backend errors to be shown during conversion, we can add the -I parameter. As before, the -r parameter tells sigmac to process the given path recursively, which here is a directory of rules.

sigmac -t splunk -r /home/kali/sigma-master/rules/web/

Converting Rule with Custom Config

The -c parameter specifies the path to a config file. Rules generally do not work without a config file, so you can use the config files in the tools directory during conversion.

sigmac -t splunk -c /home/kali/sigma-master/tools/config/splunk-windows.yml -r /home/kali/sigma-master/rules/web/web_webshell_keyword.yml

Converting Rule with General Config File

In the following command, Elasticsearch query strings are selected as the conversion target (-t es-qs), the general-purpose sysmon config file is passed as the config file, and the process_creation rules for Windows are converted.

sigmac -t es-qs -c /home/kali/sigma-master/tools/config/sysmon.yml -r /home/kali/sigma-master/rules/windows/process_creation
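The invocations above differ only in which options are passed, so they can be assembled by one small helper. The sketch below only builds the argument list and does not run anything. `build_sigmac_command` is a hypothetical helper, and the -t, -c, -r, and -I options are the ones described in this article.

```python
import shlex


def build_sigmac_command(target, rule_path, config=None, ignore_backend_errors=False):
    """Assemble a sigmac argument list from the options covered in this article."""
    command = ["sigmac", "-t", target]
    if config is not None:
        command += ["-c", config]   # config file, e.g. one from tools/config
    if ignore_backend_errors:
        command.append("-I")        # do not show backend errors
    command += ["-r", rule_path]    # rule file or directory, as in the examples
    return command


single = build_sigmac_command(
    "splunk", "/home/kali/sigma-master/rules/web/web_webshell_keyword.yml"
)
with_config = build_sigmac_command(
    "es-qs",
    "/home/kali/sigma-master/rules/windows/process_creation",
    config="/home/kali/sigma-master/tools/config/sysmon.yml",
)

# shlex.join (Python 3.8+) renders the list as a copy-pasteable shell command.
print(shlex.join(single))
print(shlex.join(with_config))
```

To execute a command, the list can be handed to `subprocess.run(command, check=True)`, assuming the sigmac script is installed and on the PATH.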

Uncoder.IO

Uncoder.IO is an online tool that translates SIEM-specific saved searches, filters, queries, API requests, correlation rules, and Sigma rules to assist SOC analysts, threat hunters, and SIEM engineers. Below, we convert a Sigma rule chosen as an example. After typing the Sigma rule into the left field, you can convert it to the query format you want by choosing QRadar, Splunk, or ArcSight in the right field.

When converting, make sure to choose the correct log source types. You should also evaluate the performance impact, since the generated query searches the payload. The tool is available on the Uncoder.IO site.

References:

https://www.sans.org/cyber-security-summit/archives/file/summit-archive-1544043890.pdf